Playwright Test Reports: The Complete Guide to Test Reporting and Analysis

Generating effective Playwright test reports is essential for understanding your test automation results.

You've written comprehensive Playwright tests. You've configured your CI/CD pipeline. You're running tests across multiple browsers and environments. But when a test fails at 2 AM, can you quickly understand what went wrong? Can you spot flaky tests before they slow your pipeline? Do you have real-time visibility into your test suite's health?

Effective test reporting is what transforms raw test execution into actionable insights.

Playwright has become the go-to framework for end-to-end testing, offering powerful browser automation capabilities across Chromium, Firefox, and WebKit. But running tests is only half the battle; understanding what happened, why tests failed, and how your test suite performs over time requires effective Playwright reporting and comprehensive Playwright test results analysis. Without proper reporting, you're flying blind. You might know that 3 tests failed, but you don't know:

- Why they failed (without digging through logs)

- If they're consistently failing (or just flaky)

- How your test suite is trending (getting better or worse)

- What to prioritize (which failures matter most)

This comprehensive guide covers everything you need to know about Playwright test reports: from built-in reporters to advanced reporting solutions that help teams scale their testing efforts. Whether you're debugging a single failure or managing a test suite with thousands of tests, you'll learn how to extract maximum value from your test results. By the end of this guide, you'll know:

- How to choose the right reporter for your needs

- How to configure and customize Playwright reports

- When to upgrade from built-in to advanced reporting solutions

- Best practices for effective test reporting

- How to solve common reporting challenges

Table of Contents

- What is a Playwright Test Report?

- Why Test Reports Matter

- Built-in Playwright Reporters

- Understanding Playwright Trace Viewer

- Limitations of Playwright's Built-in Reports

- CI/CD Reporting Challenges

- What Modern Teams Expect from Test Reports

- Creating Custom Reporters in Playwright

- How Enhanced Reporting Helps: A Practical Look

- Introducing NeetoPlaydash: Modern Playwright Test Reporting

- Common Playwright Reporting Challenges and Solutions

- Choosing the Right Reporting Strategy

- Best Practices for Playwright Test Reporting

- Conclusion

- Frequently Asked Questions

What is a Playwright Test Report?

A Playwright test report is a structured output that documents the results of your automated test executions. At its core, a test report answers three fundamental questions:

- What passed? Which tests executed successfully and validated expected behavior

- What failed? Which tests encountered issues, along with error details

- What happened? The execution context, timing, and artifacts that help understand results

A typical Playwright test report includes:

- Test outcomes: Pass, fail, skip, or flaky status for each test

- Execution metadata: Duration, browser type, retry count, and environment details

- Error information: Stack traces, error messages, and failure screenshots

- Artifacts: Screenshots, videos, and trace files captured during execution

Test reports serve different audiences. Developers need detailed error information for debugging. QA leads need aggregate metrics to assess release readiness. Engineering managers need trends to track quality over time.

Why Test Reports Matter

Before diving into specific reporters, it's worth understanding the value effective test reporting provides:

- Faster debugging: Detailed traces and screenshots help pinpoint issues in seconds rather than hours

- Improved team collaboration: Shareable reports enable transparent communication across teams

- Data-driven decisions: Test metrics guide prioritization, resource allocation, and release timing

- Better test management: Visual results help identify coverage gaps and redundant tests

- Early problem detection: Catching issues in testing is far cheaper than fixing them in production

- Historical performance tracking: Trends reveal whether your application quality is improving or declining

Built-in Playwright Reporters

Playwright ships with several built-in reporters, each designed for specific use cases. You can configure them in your playwright.config.ts file. For complete documentation on reporters, see the official Playwright reporters guide.

List Reporter

The list reporter is Playwright's default when running locally (on CI, the default is the dot reporter). It prints a line for each test as it completes, showing a green checkmark (✓) for passed tests, a red cross (✗) for failures, and a dash (-) for skipped tests.

Command line:

npx playwright test --reporter=list

Configuration file:

// playwright.config.ts
import { defineConfig } from '@playwright/test';

export default defineConfig({
  reporter: 'list',
});

Output:

Running 24 tests using 4 workers

✓ auth/login.spec.ts:12:5 › should login with valid credentials (2.1s)

✓ auth/login.spec.ts:28:5 › should show error for invalid password (1.8s)

✗ cart/checkout.spec.ts:45:5 › should complete purchase (15.2s)

Error: Expected "Order confirmed" but got "Payment failed"

- cart/wishlist.spec.ts:12:5 › should save items (skipped)

Best for: Local development where you need immediate feedback on test progress. For failed tests, the error message appears directly in the output, speeding up debugging.

Dot Reporter

The dot reporter is minimalist, showing a single character per test, ideal for quickly gauging overall test suite health:

export default defineConfig({

reporter: 'dot',

});

Output uses these symbols:

| Symbol | Meaning |

|---|---|

| · | Passed |

| F | Failed |

| × | Failed or timed out (will be retried) |

| ± | Passed on retry (flaky) |

| T | Timed out |

| ° | Skipped |

Example output:

Running 124 tests using 6 workers

······F·······································F··°····×·±·

You can also run from the command line:

npx playwright test --reporter=dot

Best for: CI environments where you want compact output, especially with large test suites. The flaky indicator (±) is particularly useful for spotting intermittent failures.

Line Reporter

The line reporter updates a single line in the terminal dynamically, showing progress without scrolling output. It's perfect for large test suites where you want minimal noise but still see failures immediately.

Command line:

npx playwright test --reporter=line

Configuration file:

export default defineConfig({

reporter: 'line',

});

Output (updates in place):

[23/150] Running: checkout.spec.ts:45 should complete purchase

When a test fails, the line reporter outputs the error details so you don't miss issues:

[24/150] ✗ checkout.spec.ts:45 should complete purchase

Error: Expected "success" but received "failed"

Best for: Environments with limited terminal space, CI logs where you want compact output, or large test suites where list output would be overwhelming.

JSON Reporter

The JSON reporter outputs machine-readable results, ideal for custom tooling, external analytics platforms, and CI/CD integrations.

Command line:

npx playwright test --reporter=json

# Save to file using environment variable

PLAYWRIGHT_JSON_OUTPUT_NAME=results.json npx playwright test --reporter=json

Configuration file:

export default defineConfig({

reporter: [['json', { outputFile: 'results.json' }]],

});

Output structure:

{
  "config": { ... },
  "suites": [
    {
      "title": "Authentication",
      "file": "auth/login.spec.ts",
      "specs": [
        {
          "title": "should login with valid credentials",
          "ok": true,
          "tests": [
            {
              "projectName": "chromium",
              "expectedStatus": "passed",
              "status": "expected",
              "annotations": [],
              "results": [
                {
                  "status": "passed",
                  "duration": 2100,
                  "retry": 0,
                  "attachments": []
                }
              ]
            }
          ]
        }
      ]
    }
  ]
}

The JSON file contains complete test metadata, including projects, browser information, test steps, errors, and attachment paths, everything needed to build custom reporting.

Best for: Integrating Playwright results with custom dashboards, databases, analytics tools, or reporting systems that consume structured data.
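As a rough sketch of what consuming this file can look like, the snippet below walks the nested suites and tallies outcomes. The `Report*` types are trimmed-down assumptions covering only the fields read here, and the sketch assumes each test entry carries an outcome `status` of `expected`, `unexpected`, `flaky`, or `skipped`; check both against the JSON your Playwright version actually writes.

```typescript
// Assumed, minimal shapes: only the fields this sketch reads.
type TestOutcome = "expected" | "unexpected" | "flaky" | "skipped";

interface ReportTest { status: TestOutcome; }
interface ReportSpec { title: string; tests: ReportTest[]; }
interface ReportSuite { title: string; specs?: ReportSpec[]; suites?: ReportSuite[]; }
interface Report { suites: ReportSuite[]; }

interface Summary {
  passed: number;
  failed: number;
  flaky: number;
  skipped: number;
  failedTitles: string[];
}

// Walk every suite (including nested ones) and count test outcomes.
function summarize(report: Report): Summary {
  const summary: Summary = { passed: 0, failed: 0, flaky: 0, skipped: 0, failedTitles: [] };

  const visit = (suite: ReportSuite): void => {
    for (const spec of suite.specs ?? []) {
      for (const test of spec.tests) {
        if (test.status === "expected") summary.passed++;
        else if (test.status === "flaky") summary.flaky++;
        else if (test.status === "skipped") summary.skipped++;
        else {
          summary.failed++;
          summary.failedTitles.push(`${suite.title} › ${spec.title}`);
        }
      }
    }
    for (const child of suite.suites ?? []) visit(child);
  };

  report.suites.forEach(visit);
  return summary;
}
```

In a real script you would feed it the parsed file, for example `summarize(JSON.parse(fs.readFileSync('results.json', 'utf8')))`.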

JUnit Reporter

The JUnit reporter generates XML output in the industry-standard JUnit format, compatible with virtually all CI/CD systems.

Command line:

# Output to file using environment variable

PLAYWRIGHT_JUNIT_OUTPUT_NAME=results.xml npx playwright test --reporter=junit

Configuration file:

export default defineConfig({

reporter: [['junit', { outputFile: 'results.xml' }]],

});

Output structure:

<testsuites>

<testsuite name="Authentication" tests="5" failures="1" time="12.5">

<testcase classname="login.spec.ts" name="should login with valid credentials" time="2.1"/>

<testcase classname="login.spec.ts" name="should show error for invalid password" time="1.8">

<failure message="Expected success but got failure">

Stack trace...

</failure>

</testcase>

</testsuite>

</testsuites>

Best for: Integration with Jenkins, Azure DevOps, GitLab CI, CircleCI, and other CI/CD systems that natively parse JUnit XML. Most CI platforms can display JUnit results directly in their UI without additional configuration.

HTML Reporter

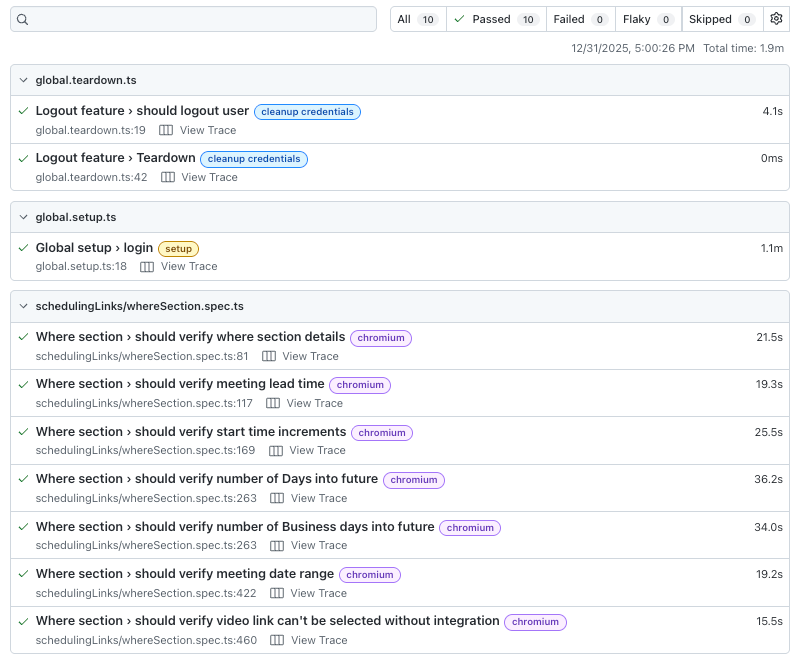

The HTML reporter is Playwright's most feature-rich built-in option, generating an interactive web-based report with comprehensive debugging capabilities.

Command line:

npx playwright test --reporter=html

Configuration file with options:

export default defineConfig({

reporter: [['html', {

outputFolder: 'playwright-report', // Report directory

open: 'never', // 'always' | 'never' | 'on-failure'

host: '0.0.0.0', // Server host for viewing

port: 9223, // Server port

}]],

});

The open option controls automatic browser opening:

- 'always': Open report after every run

- 'never': Never auto-open (useful in CI)

- 'on-failure': Only open when tests fail (default)

Features include:

- Test filtering: Search by name, status, project, or file

- Detailed test view: Step-by-step execution with timing for each action

- Artifact access: View screenshots, videos, and traces directly in browser

- Retry visibility: See results across multiple retry attempts

- Error inspection: Stack traces with clickable links to source code

- Attachment gallery: Browse all captured screenshots and videos

- Speedboard: Analyze test durations with the speedboard feature to identify slow tests

Viewing the report:

# Open the default report

npx playwright show-report

# Open a report from custom folder

npx playwright show-report my-custom-report

Best for: Manual review of test results, especially when debugging failures. The HTML report provides the richest debugging experience of all built-in reporters.

Blob Reporter

The Blob Reporter produces a special format designed for merging reports from multiple shards. When running tests across multiple CI machines, each shard generates a blob file that can be later combined into a unified report.

Configuration file:

export default defineConfig({

reporter: [['blob', { outputDir: 'blob-report' }]],

});

Command line:

npx playwright test --reporter=blob

Merging blob reports:

After collecting blob files from all shards, merge them into a single HTML report:

npx playwright merge-reports --reporter=html ./blob-report

CI/CD workflow example:

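jobs:
  test:
    runs-on: ubuntu-latest
    strategy:
      fail-fast: false
      matrix:
        shard: [1, 2, 3, 4]
    steps:
      # Checkout, Node setup, and dependency install steps omitted for brevity
      - run: npx playwright test --shard=${{ matrix.shard }}/4 --reporter=blob
      - uses: actions/upload-artifact@v4
        if: always() # upload the blob even when tests fail
        with:
          name: blob-report-${{ matrix.shard }}
          path: blob-report/
  merge-reports:
    needs: test
    if: always() # merge even when a shard failed
    runs-on: ubuntu-latest
    steps:
      # Checkout, Node setup, and dependency install steps omitted for brevity
      - uses: actions/download-artifact@v4
        with:
          pattern: blob-report-*
          merge-multiple: true
          path: all-blob-reports
      - run: npx playwright merge-reports --reporter=html ./all-blob-reports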

Best for: Sharded test execution across multiple CI jobs where you need a unified report. The blob format preserves all test data including traces, screenshots, and videos for proper merging.

Combining Multiple Reporters

Playwright supports multiple reporters simultaneously. A common pattern is combining terminal output with file-based reports:

export default defineConfig({

reporter: [

['list'],

['json', { outputFile: 'results.json' }],

['html', { outputFolder: 'playwright-report' }],

],

});

This gives you immediate feedback in the terminal while preserving detailed reports for later analysis.
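Teams often want a different mix locally than in CI. One way to express that, sketched here with a hypothetical `reportersFor` helper (the name and the specific reporter choices are illustrative assumptions, not a Playwright API), is to build the reporter list from a flag:

```typescript
// A reporter entry in the shape the `reporter` option takes: [name] or [name, options].
type ReporterEntry = [string] | [string, Record<string, unknown>];

// Hypothetical helper: choose reporters by environment.
// `isCI` would typically be `!!process.env.CI`.
function reportersFor(isCI: boolean): ReporterEntry[] {
  if (isCI) {
    return [
      ["dot"], // compact console output for CI logs
      ["junit", { outputFile: "results.xml" }], // for the CI system's test UI
      ["html", { outputFolder: "playwright-report", open: "never" }],
    ];
  }
  return [
    ["list"], // readable per-test output while developing
    ["html", { outputFolder: "playwright-report", open: "on-failure" }],
  ];
}
```

In `playwright.config.ts` this would be wired up as `reporter: reportersFor(!!process.env.CI)`.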

Comparing Built-in Reporters

| Reporter | Output Format | Best Use Case | Pros | Cons |

|---|---|---|---|---|

| List | Terminal text | Local development | Clear, detailed output per test; easy to read | Can be overwhelming for large test suites |

| Line | Single updating line | Large test suites | Compact; shows progress without clutter | Hard to identify which specific tests failed |

| Dot | Single characters | CI/CD pipelines | Quick visual progress; compact | Difficult to correlate symbols to specific tests |

| HTML | Interactive web page | Debugging and sharing | Rich UI with screenshots, videos, traces | Higher disk usage; requires browser to view |

| JSON | Machine-readable file | Custom tooling | Perfect for automation and integrations | Not human-readable; requires parsing |

| JUnit | XML file | CI/CD integration | Works with Jenkins, GitLab, Azure DevOps | Limited Playwright-specific features |

| Blob | Binary blob files | Sharded execution | Preserves all data for merging; works with CI/CD | Requires merge step to produce final report |

Quick decision guide:

- Debugging locally? → UI Mode (interactive) or HTML Reporter (post-run)

- Running in CI? → JUnit (for CI integration) + JSON (for processing)

- Large test suite? → Dot or Line Reporter for console output

- Sharing with team? → HTML Reporter + upload to shared location

- Sharded/distributed runs? → Blob Reporter for merging results

- Need historical data? → Consider a centralized reporting solution

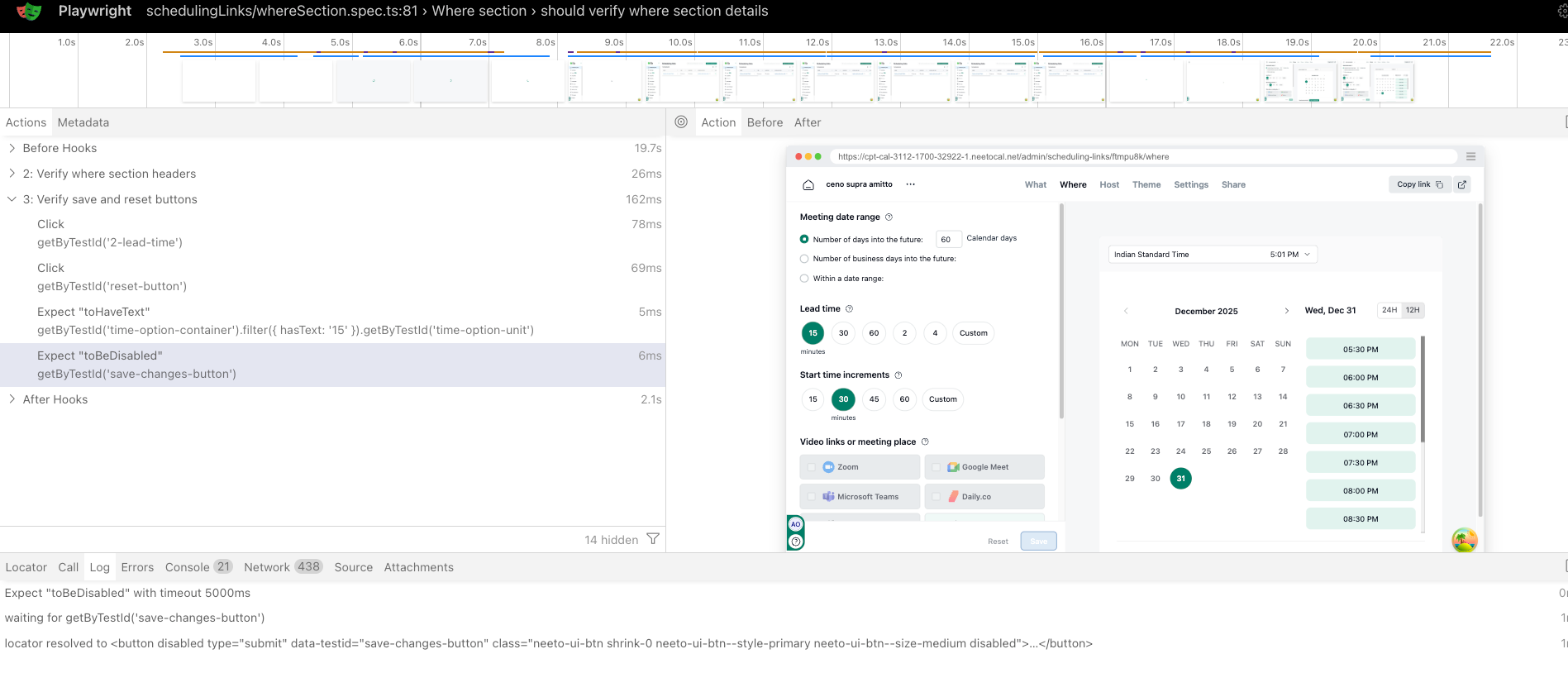

Understanding Playwright Trace Viewer

Beyond standard reports, Playwright's Trace Viewer deserves special attention. It records every action during test execution and is one of Playwright's most powerful tools for debugging test failures.

Enabling Traces

Configure trace collection in your config:

export default defineConfig({

use: {

trace: 'on-first-retry', // Record a trace only on the first retry of a failed test

},

});

Trace options include:

- 'on': Always record traces (higher storage cost)

- 'off': Never record traces

- 'on-first-retry': Record only on the first retry of a failed test (recommended)

- 'on-all-retries': Record traces for all retry attempts

- 'retain-on-failure': Record for every test, but keep traces only for failed runs

- 'retain-on-first-failure': Record for the first run of each test but not for retries, and remove the trace if that run passes
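One detail that is easy to miss: 'on-first-retry' only produces a trace if retries are enabled, because with zero retries there is never a first retry to record. A minimal config sketch, assuming you want retries on CI only (the retry count and the screenshot and video settings are illustrative choices, not recommendations from this guide):

```typescript
// playwright.config.ts
import { defineConfig } from '@playwright/test';

export default defineConfig({
  // Retry on CI so that 'on-first-retry' has a retry to record.
  retries: process.env.CI ? 2 : 0,
  use: {
    trace: 'on-first-retry',
    screenshot: 'only-on-failure', // keep screenshots for failed tests only
    video: 'retain-on-failure', // record video, discard it when the test passes
  },
});
```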

What Traces Capture

A trace file contains:

- DOM snapshots: The page state at each action

- Network requests: All API calls with request/response bodies

- Console logs: Browser console output

- Action timeline: Every click, fill, navigation with timing

- Screenshots: Automatic screenshots before each action

Viewing Traces

Open traces locally:

npx playwright show-trace trace.zip

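Or use Playwright's hosted trace viewer: open trace.playwright.dev in a browser and drop the trace file onto the page. The trace is loaded in your browser rather than uploaded. The show-trace command also accepts a URL when the trace is hosted remotely:

npx playwright show-trace https://example.com/trace.zip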

You can also view traces directly in the HTML report by clicking on a test and selecting "Traces".

What You Can Do with Traces

The Trace Viewer provides a powerful debugging experience:

- Step-by-step playback: Walk through each action like a video

- Timeline scrubbing: Jump to any point in the test execution

- Before/After snapshots: See the DOM state before and after each action

- Network panel: Inspect all API calls with request/response bodies

- Console logs: View all browser console output

- Source code view: See which line of test code caused each action

Traces are invaluable for debugging flaky tests: they let you replay exactly what happened during a specific test run, including timing and network conditions that might have caused intermittent failures.

Limitations of Playwright's Built-in Reports

While Playwright's built-in reporters cover basic needs, they have significant limitations that become apparent as teams and test suites grow.

No Historical Data

Each test run generates a new report, overwriting the previous one. There's no built-in way to:

- Compare today's results with yesterday's

- Track failure trends over weeks or months

- Identify tests that became flaky over time

- Monitor test suite health across releases

Local-Only Reports

The HTML report is generated as local files. Sharing with team members requires:

- Committing reports to a repository (bloats history)

- Uploading to a file server (manual process)

- Running a local server for remote viewing

There's no native way to send a link to a specific failed test.

No Aggregation Across Runs

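When running tests in parallel across multiple CI agents or shards, each shard produces its own report. The blob reporter and merge-reports command described earlier can combine them, but only as an extra pipeline step that you configure and maintain yourself. Out of the box, Playwright doesn't:

- Merge results from parallel shards automatically

- Present a unified view of distributed test runs without a dedicated merge job

- Calculate overall pass rates across separate runs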

Limited Analytics

Built-in reporters show pass/fail status but don't provide:

- Flakiness detection: Identifying tests that intermittently fail

- Duration trends: Spotting tests that are getting slower

- Failure categorization: Grouping failures by root cause

- Performance metrics: Test execution patterns and bottlenecks

No Team Collaboration Features

Modern testing involves multiple stakeholders. Built-in reports lack:

- Commenting on failures

- Assigning investigation tasks

- Notification integrations

- Role-based access control

CI/CD Reporting Challenges

Running Playwright tests in continuous integration environments introduces unique reporting challenges.

Distributed Execution

To speed up test execution, teams often shard tests across multiple CI jobs:

# GitHub Actions example
jobs:
  test:
    strategy:
      matrix:
        shard: [1, 2, 3, 4]
    steps:
      - run: npx playwright test --shard=${{ matrix.shard }}/4

Each shard produces its own report. Unless you use the blob reporter and add a merge job, you get four separate HTML reports instead of one unified view.

Artifact Management

CI systems treat test artifacts (screenshots, videos, traces) as ephemeral. You typically need to:

- Upload artifacts to CI storage

- Download them manually for review

- Navigate file structures to find relevant artifacts

This friction slows down failure investigation.

Real-Time Visibility

Most CI workflows show test results only after the entire job completes. For long-running test suites, this means waiting 30+ minutes before learning that a critical test failed in the first minute.

Historical Context

CI runs are transient. Once the pipeline completes, historical context is limited to:

- Pass/fail badge

- Log files (if retained)

- Artifacts (if uploaded)

There's no built-in way to query "How often has this test failed in the last 30 days?"
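If you persist per-test results yourself (for instance by storing what the JSON reporter emits after each run), that question reduces to a small filter. A sketch, assuming a hypothetical `RunRecord` shape for the stored rows:

```typescript
// Hypothetical stored record: one row per test per CI run.
interface RunRecord {
  testId: string;
  status: "passed" | "failed" | "skipped";
  finishedAt: Date;
}

// Count how often a test ran and failed within the last `days` days.
function failuresInWindow(
  records: RunRecord[],
  testId: string,
  days: number,
  now: Date = new Date(),
): { runs: number; failures: number } {
  const end = now.getTime();
  const start = end - days * 24 * 60 * 60 * 1000;
  const recent = records.filter((r) => {
    const t = r.finishedAt.getTime();
    return r.testId === testId && t >= start && t <= end;
  });
  const failures = recent.filter((r) => r.status === "failed").length;
  return { runs: recent.length, failures };
}
```

The hard part in practice is not the query but keeping the records around, which is exactly what transient CI runs don't do for you.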

Pipeline Failures vs Test Failures

Not all red builds are equal. A test might fail due to:

- Actual product bug

- Flaky test behavior

- Infrastructure issues (timeout, network)

- Environment configuration problems

Built-in reports don't help distinguish between these categories.
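A first pass at separating these categories can be done with simple heuristics on the error message. The sketch below is deliberately crude: the patterns and category names are assumptions chosen for illustration, the order of checks matters, and it cannot tell a product bug from a flaky test on its own.

```typescript
type FailureCategory = "timeout" | "network" | "assertion" | "unknown";

// Heuristic classification by error text. Timeouts are checked first because
// assertion timeouts usually mention both "timed out" and "expect(".
function classifyFailure(message: string): FailureCategory {
  const text = message.toLowerCase();
  if (/timeout|timed out/.test(text)) return "timeout";
  if (/net::err_|econnrefused|econnreset|enotfound/.test(text)) return "network";
  if (/expect\(|expected/.test(text)) return "assertion";
  return "unknown";
}
```

Counting failures per category across a run gives a quick signal: a build where most failures are `network` or `timeout` points at infrastructure rather than the product.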

Setting Up Playwright Reports in GitHub Actions

Here's a complete GitHub Actions workflow that generates and uploads Playwright reports. For more CI/CD integration examples, see Playwright's CI/CD documentation.

name: Playwright Tests
on:
  push:
    branches: [main]
  pull_request:
    branches: [main]
jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-node@v4
        with:
          node-version: 20
      - name: Install dependencies
        run: npm ci
      - name: Install Playwright browsers
        run: npx playwright install --with-deps
      - name: Run Playwright tests
        run: npx playwright test
      - name: Upload HTML report
        uses: actions/upload-artifact@v4
        if: always()
        with:
          name: playwright-report
          path: playwright-report/
          retention-days: 30

For sharded test execution across multiple jobs:

jobs:
  test:
    strategy:
      fail-fast: false
      matrix:
        shard: [1, 2, 3, 4]
    steps:
      - name: Run Playwright tests
        run: npx playwright test --shard=${{ matrix.shard }}/4
      - name: Upload shard report
        uses: actions/upload-artifact@v4
        if: always()
        with:
          name: playwright-report-${{ matrix.shard }}
          path: playwright-report/

The challenge with sharding is that you end up with multiple separate reports. Merging them takes an extra step: either the blob reporter plus a merge job, as covered in the Blob Reporter section, or a centralized reporting solution that aggregates results for you.

What Modern Teams Expect from Test Reports

As testing practices mature, teams develop expectations that go beyond basic pass/fail reporting.

Centralized Test Results

Teams want a single source of truth for test results—one place where anyone can see:

- Current test suite status

- Results from any recent run

- Trends across time periods

- Comparison between branches or environments

This eliminates the "which report do I look at?" problem.

Historical Trend Analysis

Understanding patterns over time is crucial:

- Flakiness trends: Is a test becoming more unreliable?

- Duration trends: Is the test suite getting slower?

- Failure patterns: Do failures cluster around specific times or changes?

- Stability metrics: What's our overall pass rate?

Historical data transforms testing from reactive debugging to proactive quality management.
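To make the duration-trend idea concrete, here is a small sketch that compares per-test durations between a baseline run and the latest run. The function name, the title-to-milliseconds maps, and the 1.5x threshold are all assumptions for illustration.

```typescript
// Return titles of tests whose latest duration is at least `factor` times the baseline.
// Tests without a baseline entry (new tests) are ignored.
function findSlowdowns(
  baselineMs: Record<string, number>,
  latestMs: Record<string, number>,
  factor: number = 1.5,
): string[] {
  const slower: string[] = [];
  for (const title of Object.keys(latestMs)) {
    const baseline: number | undefined = baselineMs[title];
    if (baseline === undefined || baseline <= 0) continue;
    if (latestMs[title] >= baseline * factor) slower.push(title);
  }
  return slower;
}
```

A single pair of runs is noisy; comparing against a rolling average of recent runs would be a more robust baseline.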

Flaky Test Detection

Flaky tests—those that sometimes pass and sometimes fail without code changes—are a persistent challenge. Effective reporting should:

- Automatically identify flaky tests based on historical patterns

- Quantify flakiness (e.g., "fails 15% of the time")

- Track whether fixes actually improved stability

- Prioritize the most impactful flaky tests to address
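As a sketch of how flakiness can be quantified from run history, the function below ranks tests by the share of runs that did not pass cleanly. The `Outcome` values and the input shape are assumptions; here "flaky" means a run that only passed on retry, and tests that always pass or always fail are excluded because they are stable, not flaky.

```typescript
type Outcome = "passed" | "failed" | "flaky";

interface FlakyStat {
  title: string;
  rate: number; // share of runs that failed or needed a retry
  runs: number;
}

// Rank tests by how often they failed or needed a retry across recorded runs.
function rankByFlakiness(history: Record<string, Outcome[]>): FlakyStat[] {
  const stats: FlakyStat[] = [];
  for (const title of Object.keys(history)) {
    const outcomes = history[title];
    const unstable = outcomes.filter((o) => o !== "passed").length;
    const everSucceeded = outcomes.some((o) => o !== "failed");
    // Skip tests that always pass cleanly and tests that never succeed at all.
    if (unstable === 0 || !everSucceeded) continue;
    stats.push({ title, rate: unstable / outcomes.length, runs: outcomes.length });
  }
  return stats.sort((a, b) => b.rate - a.rate);
}
```

A rate of 0.15 from this function corresponds to the "fails 15% of the time" style of statement above.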

CI/CD Integration

Test results should be accessible where teams already work:

- Pull request comments: See test status without leaving GitHub/GitLab

- Slack notifications: Get alerted on failures immediately

- Dashboard widgets: Monitor quality metrics on team dashboards

- Branch comparisons: Know if a change introduced new failures

Failure Analysis and Debugging

When tests fail, reports should accelerate root cause analysis:

- Error categorization: Group similar failures together

- Quick artifact access: One-click access to screenshots and traces

- Comparison views: See what changed between passing and failing runs

- Stack trace navigation: Jump to relevant code from error reports
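Error categorization usually starts by normalizing messages so that failures differing only in incidental values land in the same bucket. A minimal sketch, with the normalization rules chosen purely for illustration:

```typescript
interface Failure {
  test: string;
  message: string;
}

// Reduce an error message to a stable signature: first line only,
// with durations, quoted values, and remaining numbers replaced by placeholders.
function normalizeError(message: string): string {
  return message
    .split("\n")[0]
    .replace(/\d+(\.\d+)?\s?ms/g, "<duration>")
    .replace(/"[^"]*"/g, '"<value>"')
    .replace(/\d+/g, "<n>")
    .trim();
}

// Group failing tests by normalized error signature.
function groupFailures(failures: Failure[]): Map<string, string[]> {
  const groups = new Map<string, string[]>();
  for (const failure of failures) {
    const key = normalizeError(failure.message);
    const tests = groups.get(key) ?? [];
    tests.push(failure.test);
    groups.set(key, tests);
  }
  return groups;
}
```

Sorting the resulting groups by size shows which single root cause accounts for the most red tests.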

Team Collaboration

Testing is a team effort. Reports should support collaboration:

- Comments and annotations: Discuss failures in context

- Ownership assignment: Track who's investigating what

- Status tracking: Mark tests as known issues, in-progress, or fixed

- Shared bookmarks: Save and share links to specific results

Creating Custom Reporters in Playwright

Beyond built-in and third-party reporters, Playwright allows you to create custom reporters tailored to your specific needs. For detailed information on the Reporter API, see Playwright's custom reporter documentation. Custom reporters are useful when you need to:

- Send test results to a proprietary system

- Format output for a specific workflow

- Integrate with tools that don't have existing Playwright support

- Add custom metrics or logging

Building a Custom Reporter

A custom reporter is a JavaScript or TypeScript class that implements specific lifecycle methods. Playwright calls these methods at different stages of test execution:

JavaScript Example:

// my-custom-reporter.js

class MyCustomReporter {

// Called once before running tests

onBegin(config, suite) {

this.startTime = Date.now();

console.log(`Starting test run with ${suite.allTests().length} tests`);

}

// Called when a test starts

onTestBegin(test) {

console.log(`Starting: ${test.title}`);

}

// Called when a test ends (with pass/fail result)

onTestEnd(test, result) {

console.log(`Finished: ${test.title} - ${result.status}`);

console.log(`Duration: ${result.duration}ms`);

// Access error details for failed tests

if (result.status === 'failed') {

console.log(`Error: ${result.error?.message}`);

}

// Access attachments (screenshots, videos, traces)

for (const attachment of result.attachments) {

console.log(`Attachment: ${attachment.name} - ${attachment.path}`);

}

}

// Called when a step starts (for step-level reporting)

onStepBegin(test, result, step) {

console.log(`Step: ${step.title}`);

}

// Called when a step ends

onStepEnd(test, result, step) {

// step.error contains any step-level errors

}

// Called once after all tests complete

onEnd(result) {

const duration = Date.now() - this.startTime;

console.log(`Test run finished in ${duration}ms with status: ${result.status}`);

}

// Called on a global error, such as an unhandled exception in a worker process

onError(error) {

console.error('Reporter error:', error);

}

}

export default MyCustomReporter;

TypeScript Example:

// my-custom-reporter.ts

import type {

FullConfig,

FullResult,

Reporter,

Suite,

TestCase,

TestResult,

TestStep,

} from '@playwright/test/reporter';

class MyCustomReporter implements Reporter {

private startTime: number = 0;

onBegin(config: FullConfig, suite: Suite) {

this.startTime = Date.now();

console.log(`Starting test run with ${suite.allTests().length} tests`);

}

onTestBegin(test: TestCase) {

console.log(`Starting: ${test.title}`);

}

onTestEnd(test: TestCase, result: TestResult) {

console.log(`Finished: ${test.title} - ${result.status}`);

console.log(`Duration: ${result.duration}ms`);

if (result.status === 'failed') {

console.log(`Error: ${result.error?.message}`);

}

for (const attachment of result.attachments) {

console.log(`Attachment: ${attachment.name} - ${attachment.path}`);

}

}

onStepBegin(test: TestCase, result: TestResult, step: TestStep) {

console.log(`Step: ${step.title}`);

}

onStepEnd(test: TestCase, result: TestResult, step: TestStep) {

// step.error contains any step-level errors

}

onEnd(result: FullResult) {

const duration = Date.now() - this.startTime;

console.log(`Test run finished in ${duration}ms with status: ${result.status}`);

}

onError(error: Error) {

console.error('Reporter error:', error);

}

}

export default MyCustomReporter;

Using Your Custom Reporter

Register the custom reporter in your Playwright config:

export default defineConfig({

reporter: [

['./my-custom-reporter.js'],

['html'], // Can combine with built-in reporters

],

});

Practical Custom Reporter Use Cases

Sending results to Slack:

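async onEnd(result) {
  const message = `Test run completed: ${result.status}`;
  // Send to Slack webhook. Awaiting the request keeps the run alive until it
  // completes. The global fetch used here requires Node 18 or newer.
  await fetch(process.env.SLACK_WEBHOOK, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ text: message }),
  });
}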

Logging to a database:

onTestEnd(test, result) {
  // `db` is a placeholder for your own database client
  db.insert('test_results', {
    name: test.title,
    status: result.status,
    duration: result.duration,
    timestamp: new Date(),
  });
}

Custom reporters give you complete control but require development effort. For most teams, existing reporters or third-party tools provide sufficient functionality without custom code.
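To give a sense of how small a useful custom reporter can be, here is a sketch that tracks which tests ended up flaky or failed. To keep it self-contained it uses local stand-in interfaces (`CaseLike`, `ResultLike`) that mirror only the parts of Playwright's `TestCase` and `TestResult` it reads; those names, and the assumption that `outcome()` reflects all attempts seen so far when `onTestEnd` fires, are mine and worth verifying against the Reporter API docs.

```typescript
// Local stand-ins for the subset of TestCase / TestResult used here (assumed shapes).
interface CaseLike {
  id: string;
  title: string;
  outcome(): "skipped" | "expected" | "unexpected" | "flaky";
}
interface ResultLike {
  status: string;
  retry: number;
  duration: number;
}

class FlakyTrackingReporter {
  private readonly latest = new Map<string, { title: string; outcome: string }>();
  private totalDurationMs = 0;

  // Called once per attempt, so a later retry overwrites the earlier outcome.
  onTestEnd(test: CaseLike, result: ResultLike): void {
    this.latest.set(test.id, { title: test.title, outcome: test.outcome() });
    this.totalDurationMs += result.duration;
  }

  summary(): { flaky: string[]; failed: string[]; totalDurationMs: number } {
    const flaky: string[] = [];
    const failed: string[] = [];
    this.latest.forEach(({ title, outcome }) => {
      if (outcome === "flaky") flaky.push(title);
      if (outcome === "unexpected") failed.push(title);
    });
    return { flaky, failed, totalDurationMs: this.totalDurationMs };
  }

  onEnd(): void {
    const { flaky, failed, totalDurationMs } = this.summary();
    console.log(`Failed: ${failed.length}, flaky: ${flaky.length}, total test time: ${totalDurationMs}ms`);
    for (const title of flaky) console.log(`  flaky: ${title}`);
  }
}
```

To use something like this for real, you would export the class as the file's default export and register the file path in the `reporter` array, as shown earlier.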

How Enhanced Reporting Helps: A Practical Look

Let's examine how modern reporting solutions address common testing pain points, using real-world scenarios.

Scenario 1: Investigating a CI Failure

Without enhanced reporting:

- Notice red build in CI

- Open CI logs, scroll to find failure

- Download artifact zip file

- Extract and open HTML report locally

- Find the failing test, view screenshot

- Download trace file separately

- Open trace viewer

- Repeat for each failure

With enhanced reporting:

- Click notification link

- See all failures with screenshots inline

- Click to view trace in browser

- Share link with teammate

Time saved: 10-15 minutes per investigation.

Scenario 2: Identifying Flaky Tests

Without enhanced reporting:

- Notice test sometimes fails in CI

- Manually search through CI history

- Grep logs for test name

- Tally pass/fail counts in spreadsheet

- Repeat weekly to track progress

With enhanced reporting:

- Open flaky tests dashboard

- See all tests ranked by flakiness

- Click test to see failure pattern

- Track improvement after fixes

What was hours of manual work becomes a 5-minute dashboard check.

Scenario 3: Pre-Release Quality Assessment

Without enhanced reporting:

- Ask QA lead "how are tests looking?"

- QA lead manually checks recent CI runs

- Counts failures, estimates stability

- Provides verbal or Slack summary

With enhanced reporting:

- Open dashboard

- See pass rate trend for release branch

- View comparison with previous release

- Export metrics for stakeholder report

Objective, always-available quality metrics replace subjective assessments.

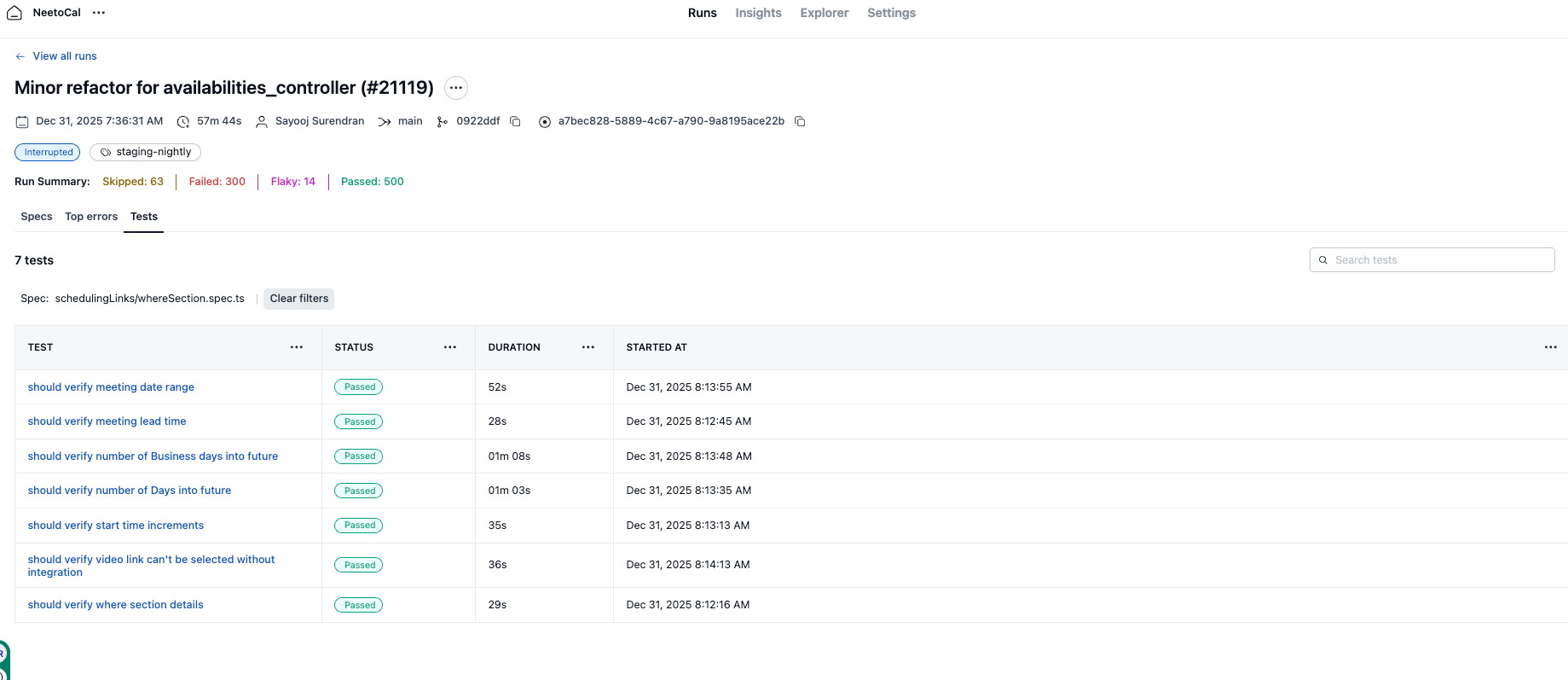

Introducing NeetoPlaydash: Modern Playwright Test Reporting

NeetoPlaydash is a purpose-built reporting solution designed specifically for Playwright tests. It addresses the limitations of built-in reporters while remaining focused and affordable.

Real-Time Test Results

NeetoPlaydash updates as your tests run. Instead of waiting for the entire suite to complete, you see results the moment each test finishes. This enables:

- Immediate failure awareness: Know about problems in seconds, not minutes

- Early abort decisions: Stop a broken build without waiting for all tests

- Live team visibility: Everyone sees the same real-time status

Quick Installation

Getting started takes minutes. Install the NeetoPlaydash reporter package:

npm install @bigbinary/neeto-playwright-reporter

Configure it in your Playwright config:

const neetoPlaywrightReporterConfig = {

/* Use a unique CI Build ID here. Using environment variables ensures each CI run is uniquely identified.

For more details, visit: https://help.neetoplaydash.com/p/a-e8dd98fa */

ciBuildId: process.env.GITHUB_RUN_ID || "local-build-id",

/* Generate an API key and use it here. Store sensitive credentials in environment variables.

For more details, visit: https://help.neetoplaydash.com/p/a-e1c8f8ca */

apiKey: process.env.NEETO_PLAYDASH_API_KEY,

projectKey: "**************",

};

export default defineConfig({

// ...

reporter: [["@bigbinary/neeto-playwright-reporter", neetoPlaywrightReporterConfig ]],

});

The reporter works in both local development and CI environments—same configuration, consistent results.

Centralized Dashboard

All test results flow into a single dashboard. Whether tests run locally, in CI, across multiple branches, or from different team members—everything appears in one place.

Runs Management:

NeetoPlaydash provides comprehensive run management with flexible viewing options:

- Card and Table Views: Switch between visual card layout and detailed table format based on your preference

- Advanced Filtering: Filter runs by:

- Branch name (see results for specific feature branches)

- Run status: Passed, Failed, Flaky, Interrupted, Skipped, or Running

- Author (identify who triggered each run)

- Tags (filter by test categories or environments)

- Date range (analyze trends over specific periods)

- Test counts (find runs with specific pass/fail ratios)

- Error types (quickly locate runs with specific failure patterns)

This eliminates the "scattered reports" problem and gives everyone a shared view of test health. The flexible filtering makes it easy to find exactly what you're looking for, whether you're investigating a specific failure or analyzing trends.

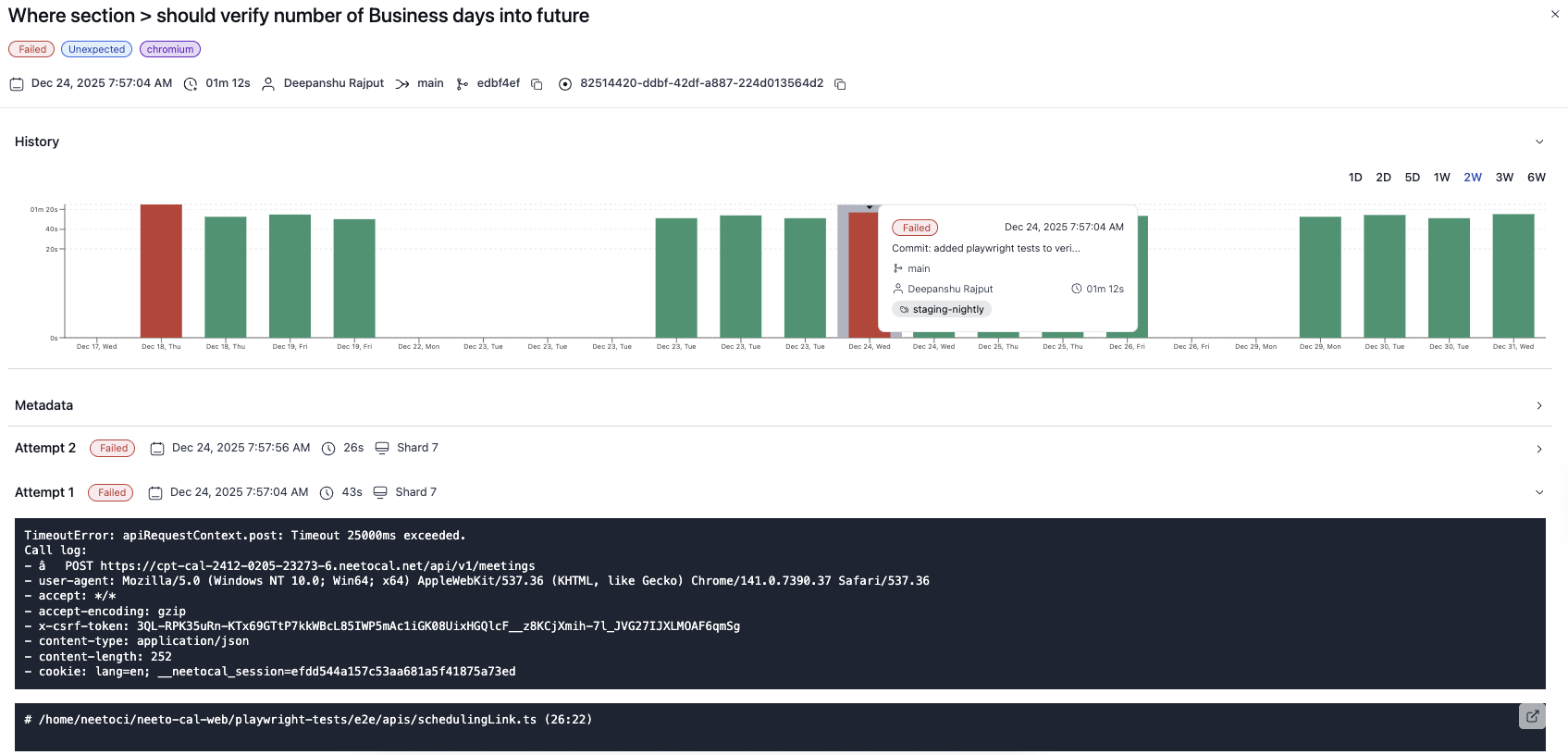

Analytics and Insights

NeetoPlaydash transforms raw test data into actionable insights with comprehensive analytics:

Key Metrics Tracked:

- Runs Status: Monitor overall test run health across all status types—Passed, Failed, Flaky, Interrupted, Skipped, and Running. Track trends and identify patterns in run outcomes over time

- Runs Duration: Track execution time trends to identify performance regressions

- Tests Results: Detailed breakdown of individual test performance over time

- Tests Flakiness: Automatic detection and quantification of flaky tests with historical patterns

Export Capabilities:

All insights are exportable for further analysis or reporting:

- JSON Export: Machine-readable format for custom dashboards or integrations

- CSV Export: Spreadsheet-friendly format for stakeholder reports and data analysis

Whether you need a quick health check or deep analysis, NeetoPlaydash provides the metrics that matter—and makes them easy to share with your team or stakeholders.

Test and Error Explorer

NeetoPlaydash includes powerful exploration tools for deep-dive analysis:

- Tests Explorer: Browse and search through all your tests across runs. View test history, identify patterns, and track individual test performance over time

- Errors Explorer: Centralized error analysis that groups similar failures together, making it easy to identify root causes and track error frequency

The Explorer views help you move beyond individual run analysis to understand your test suite's behavior holistically. Whether you're investigating a recurring failure or analyzing test coverage, the Explorer provides the navigation and filtering you need.

Smart Execution Strategies

Beyond reporting, NeetoPlaydash offers execution features:

- Fail-fast execution: Stop the run early when critical tests fail

- Flexible aggregation: Customize how run status is calculated across shards

- Retry intelligence: Handle retries with clear pass/fail determination

Integrations and Notifications

Test results integrate seamlessly with tools your team already uses:

Platform Integrations:

- GitHub: Status checks, PR comments, and links to relevant test results directly in pull requests

- Slack: Configurable notifications for failures, with direct links to details

- Microsoft Teams: Team notifications and test result summaries in your Teams channels

Webhooks:

For custom integrations or advanced automation, NeetoPlaydash supports webhooks. Configure webhook endpoints to receive real-time notifications about test runs, failures, or other events. This enables integration with any system that accepts HTTP callbacks—from custom dashboards to internal tools.

Email Notifications:

Stay informed even when you're away from your primary tools:

- Notification Preferences: Choose your notification frequency:

  - Daily summaries

  - Weekly reports

  - Monthly overviews

- Customizable Triggers: Configure when you receive emails (failures only, all runs, or specific conditions)

No context switching—stay in your workflow while staying informed about test health, whether through your development tools, chat platforms, or email.

Flexible Configuration and Settings

NeetoPlaydash provides extensive configuration options to match your team's workflow:

Metadata Fields:

Add custom metadata fields to each test run to provide additional context and insights. These are input fields that automation teams and developers fill in to capture important information about a test run without having to dig deep into the run details.

Common use cases include:

- Environment information (staging, production, QA)

- Feature flags

- Custom annotations or notes about the test run

Metadata fields help teams quickly understand the context of a test run at a glance—what environment it ran in, which version was tested, or what changes were included. This contextual information makes it easier to understand test results without navigating through detailed run information.

Notification Settings:

Fine-tune how and when you receive updates:

- Email Notifications: Configure frequency (daily, weekly, monthly) and triggers

- Platform Notifications: Enable or disable notifications per integration (Slack, GitHub, MS Teams)

- Notification Preferences: Set up rules for when notifications are sent (failures only, threshold-based, or all runs)

Integration Management:

Manage all your integrations from a central settings page. Connect or disconnect services, configure notification channels, and set up webhook endpoints—all in one place.

This flexibility ensures NeetoPlaydash adapts to your team's processes rather than forcing you to change how you work.

Affordable Pricing

NeetoPlaydash offers transparent, usage-based pricing:

- Free Plan: Up to 10,000 tests/month per workspace, with unlimited team members

- Pro Plan: $10 per additional 10,000 tests

Unlike enterprise-focused alternatives with per-seat licensing, NeetoPlaydash's pricing scales with actual usage. This means you only pay for what you use, making professional-grade test reporting available to teams of all sizes, not just those with large budgets.

The free tier includes all core features—real-time results, analytics, integrations, and centralized debugging—so you can evaluate the platform fully before upgrading.

Common Playwright Reporting Challenges and Solutions

Even with good tooling, teams encounter specific challenges with test reporting. Here's how to address them:

Challenge: Report Overload with Large Test Suites

When you have hundreds or thousands of tests, reports become overwhelming. Finding the relevant failure among hundreds of passed tests is like finding a needle in a haystack.

Solutions:

- Use filters aggressively—filter by status, project, or tag

- Organize tests into logical groups (by feature, module, or priority)

- Configure reporters to highlight failures prominently

- Consider summary views that show only failed tests first
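One way to apply the grouping advice is to split the suite into tagged projects so that each slice of the report stays small. The sketch below is only an illustration: the @smoke and @slow tags and the project names are hypothetical, and it assumes your tests already carry those tags.

```typescript
// playwright.config.ts
import { defineConfig } from '@playwright/test';

export default defineConfig({
  projects: [
    // Fast, high-signal subset: only tests tagged @smoke (hypothetical tag).
    { name: 'smoke', grep: /@smoke/ },
    // All remaining tests, minus those tagged @slow (hypothetical tag).
    { name: 'regression', grepInvert: /@smoke|@slow/ },
  ],
});
```

Running npx playwright test --project=smoke then produces a report that covers only that group, which keeps failures easy to spot.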

Challenge: Flaky Tests Polluting Reports

Flaky tests create noise. When the same test passes and fails intermittently, it's hard to know if a failure represents a real issue or random flakiness.

Solutions:

- Configure retries in Playwright (retries: 2)

- Track flakiness over time with historical data

- Quarantine known flaky tests with tags

- Use tools that automatically detect flakiness patterns
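The retry and quarantine ideas above could be combined in the config roughly as follows. This is a sketch under assumptions: @quarantine is a hypothetical tag you would add to known flaky tests, and the retry count of 2 simply mirrors the value mentioned above.

```typescript
// playwright.config.ts
import { defineConfig } from '@playwright/test';

export default defineConfig({
  // Retry only in CI so local failures surface immediately.
  retries: process.env.CI ? 2 : 0,
  // Exclude tests tagged @quarantine (hypothetical tag) from the main run.
  grepInvert: /@quarantine/,
  use: {
    // Record a trace on the first retry to help explain why a test flaked.
    trace: 'on-first-retry',
  },
});
```

Quarantined tests can still be run separately with npx playwright test --grep @quarantine, so they keep producing data without blocking the main pipeline.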

Challenge: Slow Report Generation

Generating detailed reports with screenshots, videos, and traces can slow down CI pipelines significantly.

Solutions:

- Capture artifacts only on failure (screenshot: 'only-on-failure')

- Use trace recording strategically (trace: 'on-first-retry')

- Generate lightweight reports (JSON, JUnit) in CI, detailed reports (HTML) on demand

- Compress and parallelize artifact uploads
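A config along these lines is one way to keep CI output light while still allowing a detailed report later. Treat it as a sketch: the output paths under reports/ are hypothetical, and the blob reporter is included on the assumption that you want to build the HTML report on demand after the run.

```typescript
// playwright.config.ts
import { defineConfig } from '@playwright/test';

const isCI = !!process.env.CI;

export default defineConfig({
  reporter: isCI
    ? [
        ['dot'], // compact console output
        ['json', { outputFile: 'reports/results.json' }], // hypothetical path
        ['junit', { outputFile: 'reports/junit.xml' }], // hypothetical path
        ['blob'], // raw data that can be turned into HTML later
      ]
    : [['list'], ['html']],
  use: {
    screenshot: 'only-on-failure',
    video: 'retain-on-failure',
    trace: 'on-first-retry',
  },
});
```

With the blob output saved as a CI artifact, npx playwright merge-reports --reporter html ./blob-report generates the HTML report only when someone needs it.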

Challenge: Cross-Environment Inconsistencies

Tests behave differently across browsers, operating systems, and environments. Reports that don't show this context make debugging difficult.

Solutions:

- Include environment metadata in reports (browser, OS, viewport)

- Use Playwright projects to organize by environment

- Tag tests by target environment

- Compare results across environments in a centralized dashboard
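The project-based organization above might look like the following sketch. The device names come from Playwright's built-in device registry; the TEST_ENV variable and the metadata key are hypothetical and only show where environment context could be attached.

```typescript
// playwright.config.ts
import { defineConfig, devices } from '@playwright/test';

export default defineConfig({
  projects: [
    { name: 'chromium', use: { ...devices['Desktop Chrome'] } },
    { name: 'firefox', use: { ...devices['Desktop Firefox'] } },
    { name: 'webkit', use: { ...devices['Desktop Safari'] } },
    { name: 'mobile-chrome', use: { ...devices['Pixel 5'] } },
  ],
  // Free-form run metadata; TEST_ENV is a hypothetical variable set by your pipeline.
  metadata: { environment: process.env.TEST_ENV ?? 'local' },
});
```

Each result is then labeled with its project name, so a failure that appears only under webkit or mobile-chrome is visible without opening the logs.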

Challenge: No Actionable Insights

Raw pass/fail counts don't tell you what to do next. Teams need insights, not just data.

Solutions:

- Track trends over time, not just current status

- Identify the most frequently failing tests

- Measure test duration trends

- Focus on metrics that drive decisions: flakiness rate, mean time to fix, test coverage
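To make these metrics concrete, here is a small sketch that ranks tests by failure rate and flakiness rate across stored runs. The TestRecord shape is hypothetical (one row per test per run, for example flattened from saved JSON reports); it is not a Playwright type.

```typescript
// Hypothetical record shape: one entry per test per run.
interface TestRecord {
  runId: string;
  title: string;
  status: 'passed' | 'failed' | 'flaky' | 'skipped';
  durationMs: number;
}

interface TestStats {
  title: string;
  runs: number;
  failureRate: number;
  flakinessRate: number;
  avgDurationMs: number;
}

function summarize(records: TestRecord[]): TestStats[] {
  // Group records by test title, ignoring skipped executions.
  const byTitle = new Map<string, TestRecord[]>();
  for (const r of records) {
    if (r.status === 'skipped') continue;
    const list = byTitle.get(r.title) ?? [];
    list.push(r);
    byTitle.set(r.title, list);
  }

  const stats: TestStats[] = [];
  byTitle.forEach((list, title) => {
    const runs = list.length;
    const failed = list.filter((r) => r.status === 'failed').length;
    const flaky = list.filter((r) => r.status === 'flaky').length;
    const totalMs = list.reduce((sum, r) => sum + r.durationMs, 0);
    stats.push({
      title,
      runs,
      failureRate: failed / runs,
      flakinessRate: flaky / runs,
      avgDurationMs: totalMs / runs,
    });
  });

  // Most frequently failing tests first, then the flakiest.
  return stats.sort(
    (a, b) => b.failureRate - a.failureRate || b.flakinessRate - a.flakinessRate,
  );
}
```

The top of the returned list is a reasonable starting point for deciding what to fix first; comparing avgDurationMs between time windows gives a rough duration trend.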

Choosing the Right Reporting Strategy

There's no one-size-fits-all approach to test reporting. Your choice depends on several factors:

For Solo Developers or Small Projects

Playwright's built-in HTML reporter often suffices:

- Low overhead

- No additional services

- Familiar interface

Consider upgrading when you need historical data or work across multiple machines.

For Growing Teams

The pain points typically emerge when:

- Multiple people run tests and need shared visibility

- CI test failures need quick investigation

- Flaky tests start wasting significant time

This is when centralized reporting tools provide clear value.

For Established Test Suites

Teams with mature test automation benefit from:

- Historical trend analysis

- Flakiness management

- Integration with development workflows

- Team collaboration features

Investing in proper tooling pays for itself in reduced investigation time and improved quality visibility.

Evaluation Criteria

When choosing a reporting solution, consider:

- Setup complexity: How quickly can you get started?

- Maintenance burden: Does it require infrastructure you need to manage?

- Feature fit: Does it solve your actual problems?

- Pricing model: Is it sustainable for your team size?

- Integration depth: Does it work with your existing tools?

Best Practices for Playwright Test Reporting

Regardless of which tools you use, certain practices improve reporting effectiveness.

Meaningful Test Names

Reports are only useful if you can understand what failed:

// Hard to understand in reports

test('test1', async ({ page }) => { ... });

// Clear and scannable

test('user can complete checkout with valid credit card', async ({ page }) => { ... });

Appropriate Artifact Collection

Configure artifacts based on debugging needs:

export default defineConfig({

use: {

screenshot: 'only-on-failure', // Save storage, capture what matters

video: 'retain-on-failure', // Videos only for failures

trace: 'on-first-retry', // Traces for debugging flaky tests

},

});

Test Annotations

Use annotations to add metadata to reports (Playwright's test annotations documentation covers the details):

test('checkout flow', async ({ page }) => {

test.info().annotations.push({

type: 'priority',

description: 'critical-path'

});

// test implementation

});
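Annotations become more useful when a reporter reads them. The sketch below groups failed tests by the priority annotation used above. The interfaces are simplified stand-ins for the objects Playwright passes to reporters, not the real types, and the sketch assumes the annotation is visible on the test case by the time onTestEnd fires.

```typescript
// Simplified stand-ins for Playwright's TestCase and TestResult (assumptions).
interface Annotation { type: string; description?: string }
interface TestCaseLike { title: string; annotations: Annotation[] }
interface TestResultLike {
  status: 'passed' | 'failed' | 'timedOut' | 'skipped' | 'interrupted';
}

class PriorityReporter {
  private failuresByPriority = new Map<string, string[]>();

  onTestEnd(test: TestCaseLike, result: TestResultLike): void {
    // Only failed or timed-out attempts are interesting here.
    if (result.status !== 'failed' && result.status !== 'timedOut') return;
    const priority =
      test.annotations.find((a) => a.type === 'priority')?.description ?? 'unprioritized';
    const titles = this.failuresByPriority.get(priority) ?? [];
    titles.push(test.title);
    this.failuresByPriority.set(priority, titles);
  }

  // Plain-object view of the grouped failures.
  summary(): Record<string, string[]> {
    const out: Record<string, string[]> = {};
    this.failuresByPriority.forEach((titles, priority) => {
      out[priority] = titles;
    });
    return out;
  }

  onEnd(): void {
    console.log(JSON.stringify(this.summary(), null, 2));
  }
}
```

To use it as an actual reporter, the class would live in its own file, be the default export, and be listed in the reporter array of the config.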

Use Soft Assertions

Standard assertions stop the test immediately on the first failure, hiding any subsequent errors. Soft assertions record each failure and let the test continue; the test is still marked as failed, but the report shows every failed check from a single run.

test('user profile', async ({ page }) => {

// These will all run, even if one fails

await expect.soft(page.getByTestId('name')).toHaveText('John');

await expect.soft(page.getByTestId('role')).toHaveText('Admin');

await expect.soft(page.getByTestId('status')).toHaveText('Active');

});

Consistent Test Organization

Group tests logically so reports are navigable:

tests/

auth/

login.spec.ts

logout.spec.ts

cart/

add-item.spec.ts

checkout.spec.ts

Regular Report Review

Make test reports part of your workflow:

- Review nightly run results each morning

- Check trends weekly in team meetings

- Address flaky tests before they accumulate

Conclusion

Playwright test reports are essential for understanding and improving your test automation efforts. While Playwright's built-in reporters provide a solid foundation, growing teams quickly encounter limitations around historical data, centralization, and collaboration.

The good news is that the ecosystem offers options for a range of needs and budgets, including focused SaaS solutions like NeetoPlaydash.

For more Playwright resources, check out the official Playwright documentation, Playwright GitHub repository, and the Playwright community.

The key is matching your reporting strategy to your actual needs. Start with built-in reporters, recognize when their limitations are costing you time, and invest in better tooling when the value is clear.

Effective test reporting isn't just about seeing pass/fail counts—it's about understanding your test suite's behavior, catching problems early, and continuously improving quality. With the right approach, test reports become a powerful tool for building better software.

Frequently Asked Questions

How do I generate an HTML report in Playwright?

Configure the HTML reporter in your playwright.config.ts:

export default defineConfig({

reporter: [['html', { outputFolder: 'playwright-report' }]],

});

After running tests, view the report with npx playwright show-report.

Can I use multiple reporters at once?

Yes. Playwright supports multiple reporters simultaneously:

export default defineConfig({

reporter: [

['list'],

['json', { outputFile: 'results.json' }],

['html'],

],

});

How do I share Playwright test reports with my team?

Built-in reports are local files. For sharing, you can:

- Upload the playwright-report folder to a web server

- Use CI artifact storage with public links

- Adopt a centralized reporting tool like NeetoPlaydash that provides shareable URLs

What's the difference between traces and reports?

Reports provide a summary of test results—what passed, failed, and how long tests took.

Traces are detailed recordings of individual test runs, including DOM snapshots, network requests, and action timelines. They're used for debugging specific failures.

How can I detect flaky tests in Playwright?

Playwright labels a test as flaky within a single run when it fails and then passes on retry, but it does not track flakiness across runs. You can:

- Configure retries and look for tests that pass on retry

- Use third-party tools that analyze historical results

- Use reporting platforms like NeetoPlaydash that automatically identify flaky patterns
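For the retry-based approach, the JSON reporter output can be scanned for tests that only passed on retry. The sketch below assumes a minimal subset of the JSON report shape (nested suites containing specs, each with per-project tests and a status field); the interfaces are simplified and omit everything not used here.

```typescript
// Minimal, assumed subset of Playwright's JSON report structure.
interface JsonTest {
  projectName: string;
  status: 'expected' | 'unexpected' | 'flaky' | 'skipped';
}
interface JsonSpec { title: string; file: string; tests: JsonTest[] }
interface JsonSuite { title: string; specs: JsonSpec[]; suites?: JsonSuite[] }
interface JsonReport { suites: JsonSuite[] }

function findFlaky(report: JsonReport): string[] {
  const flaky: string[] = [];

  // Walk the suite tree depth-first and collect tests marked flaky.
  const visit = (suite: JsonSuite): void => {
    for (const spec of suite.specs) {
      for (const t of spec.tests) {
        if (t.status === 'flaky') {
          flaky.push(`${spec.file} > ${spec.title} [${t.projectName}]`);
        }
      }
    }
    for (const child of suite.suites ?? []) visit(child);
  };

  for (const suite of report.suites) visit(suite);
  return flaky;
}
```

In practice the input would come from parsing the results.json file written by the JSON reporter. Storing the output of each run is what turns this single-run check into a flakiness history.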

How do I report Playwright tests in GitHub Actions?

Add the HTML reporter and upload artifacts. Note that when using --reporter=json,html in the CLI, the JSON output goes to stdout by default. To save the JSON report, configure an output file using the PLAYWRIGHT_JSON_OUTPUT_NAME environment variable:

- run: PLAYWRIGHT_JSON_OUTPUT_NAME=results.json npx playwright test --reporter=json,html

- uses: actions/upload-artifact@v4

  # Upload the report even when the test step fails

  if: always()

  with:

    name: playwright-report

    path: playwright-report/

For better integration, use tools with native GitHub support that post results directly to PRs.

What makes NeetoPlaydash different from other reporting tools?

NeetoPlaydash is focused specifically on Playwright, with:

- Quick 5-minute setup

- Real-time results (not just post-run reports)

- Affordable pricing without per-seat costs

- GitHub and Slack integration

- Centralized dashboard for team visibility

It's designed to solve common Playwright reporting problems without the complexity or cost of enterprise-focused alternatives.

How do I capture screenshots and videos in Playwright reports?

Configure artifact capture in your Playwright config:

export default defineConfig({

use: {

screenshot: 'only-on-failure', // 'on' | 'off' | 'only-on-failure' | 'on-first-failure'

video: 'retain-on-failure', // 'on' | 'off' | 'retain-on-failure' | 'on-first-retry'

trace: 'on-first-retry', // 'on' | 'off' | 'on-first-retry' | 'retain-on-failure' | 'on-all-retries' | 'retain-on-first-failure'

},

});

These artifacts appear in the HTML report and are invaluable for debugging failed tests.

Can I create custom reporters in Playwright?

Yes. Playwright supports custom reporters by implementing a JavaScript class with lifecycle methods:

class MyReporter {

onBegin(config, suite) { /* Called when run starts */ }

onTestBegin(test) { /* Called when each test starts */ }

onTestEnd(test, result) { /* Called when each test ends */ }

onEnd(result) { /* Called when run ends */ }

}

module.exports = MyReporter;

Register it in your config: reporter: [['./my-reporter.js']]
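As a slightly fuller sketch of the same lifecycle, the reporter below tallies final outcomes and total duration. The interfaces are simplified stand-ins for Playwright's TestCase and TestResult, declared locally so the example is self-contained; a real reporter would import the types from @playwright/test/reporter instead.

```typescript
type Outcome = 'expected' | 'unexpected' | 'flaky' | 'skipped';

// Simplified stand-ins for the objects Playwright passes to reporters (assumptions).
interface TestCaseLike { id: string; title: string; outcome(): Outcome }
interface TestResultLike { duration: number }

class SummaryReporter {
  private seen = new Map<string, TestCaseLike>();
  totalMs = 0;

  // Called once per attempt, so a retried test arrives here more than once.
  onTestEnd(test: TestCaseLike, result: TestResultLike): void {
    this.seen.set(test.id, test);
    this.totalMs += result.duration;
  }

  // outcome() reflects all attempts, so it is read after the run has finished.
  counts(): Record<Outcome, number> {
    const counts: Record<Outcome, number> = {
      expected: 0,
      unexpected: 0,
      flaky: 0,
      skipped: 0,
    };
    this.seen.forEach((t) => {
      counts[t.outcome()] += 1;
    });
    return counts;
  }

  onEnd(): void {
    const c = this.counts();
    console.log(
      `passed=${c.expected} failed=${c.unexpected} flaky=${c.flaky} ` +
        `skipped=${c.skipped} time=${this.totalMs}ms`,
    );
  }
}
```

Keying by test id rather than counting attempts means a test that fails once and passes on retry is reported as one flaky test, not as one failure plus one pass.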

Why are my Playwright reports so large?

Large reports typically come from:

- Videos enabled for all tests: Use video: 'retain-on-failure' instead of video: 'on'

- Full traces always recorded: Use trace: 'on-first-retry' instead of trace: 'on'

- Screenshots for all tests: Use screenshot: 'only-on-failure'

These settings reduce artifact size while preserving debugging capability for failures.

How can I view Playwright reports in CI/CD?

Upload the report as a CI artifact:

- uses: actions/upload-artifact@v4

  if: always()

  with:

    name: playwright-report

    path: playwright-report/

For easier access, use a centralized reporting tool like NeetoPlaydash that provides direct links to results without artifact downloads.

Ready to get started?

Let's get started now.